"The Ethics of AI: Exploring The Moral Implications"

Welcome to our journey into the world of Artificial Intelligence (AI), a land where machines think, learn, and sometimes even outsmart us. It’s a place full of wonders, but also some really big questions. In this blog, we’re going to chat about two of these big questions: how to make sure AI is fair and not biased, and how to keep our private stuff private in an AI world.

Context

Think about AI like a super-smart kid in class. This kid is so smart that they can solve tough math problems, predict the weather, and even recommend which movie you should watch next. But what if this kid only listened to a few people and ignored the rest? What if they only learned from one type of book? That's kind of what happens when AI isn't taught to be fair.

AI learns from the information we feed it, kind of like how we learn from books, teachers, and our experiences. But sometimes, the information AI learns from isn't fair or complete. It might be missing stories from some people, or it might have old ideas that we don’t think are right anymore. When that happens, AI can make decisions that aren't fair to everyone. As a result, algorithms can behave in ways nobody intended, which puts businesses that use these technologies carelessly at risk.

Then there’s the big issue of privacy. AI is like a detective that's really good at finding clues and putting them together to solve mysteries. It can look at all the things we do online – the things we buy, the posts we like, the places we visit – and learn a lot about us. This can be super helpful, like when it suggests a new song we end up loving. But it can also feel like someone is peeking into our personal diary. We need to make sure AI uses its detective skills in a way that doesn’t make us uncomfortable or invade our privacy.

What We'll Review

In this blog, we won't be talking about how AI might change society or jobs. Instead, we’re focusing on the stuff that affects us personally. How do we make sure the AI in our phones, cars, and computers is playing by the rules? How do we keep our secrets safe in an AI world?

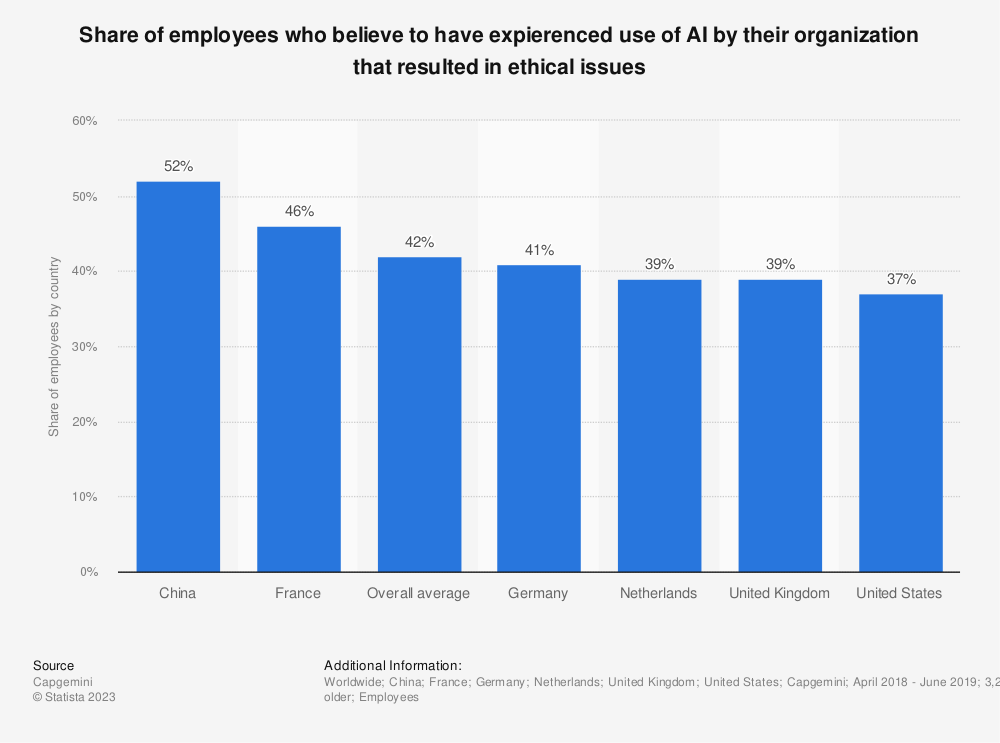

"Share of employees who believe their organization's use of AI has resulted in ethical issues"

AI and Its Learning Habits

Imagine you're teaching someone to recognize animals. If you only show them pictures of dogs and never cats, they're going to think all four-legged furry creatures are dogs. That's a bit like what happens with AI. AI learns from data – tons of it. But if this data has a lot of one thing and not enough of another, AI gets a skewed view of the world.

For example, let’s say we're creating an AI system to review job applications. If most of the applications it learns from come from one specific group of people, it might start thinking those are the only good candidates. This isn't AI being deliberately unfair; it's just working with what it's been given. But the result? A biased AI.
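To make that skew a bit more concrete, here's a minimal sketch of how you might check who is actually represented in a training set before any AI learns from it. Everything in it is made up for illustration: the `group_shares` helper, the `"group"` field, and the tiny list of applications are all assumptions, not part of any real hiring system.

```python
from collections import Counter

def group_shares(records, key):
    """Return each group's share of the records, as a fraction of the total."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}

# Hypothetical training set: 8 past applications from group "A", 2 from group "B".
applications = [{"group": "A"}] * 8 + [{"group": "B"}] * 2

shares = group_shares(applications, "group")
for group, share in sorted(shares.items()):
    print(f"{group}: {share:.0%}")
```

If one group makes up most of the data, like group "A" here, that's a hint the AI may end up with the "everything is a dog" problem.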

The Ripple Effect

The decisions AI makes based on biased learning can have a big ripple effect. It’s not just about recommending which movie to watch next. We're talking about things like who gets a loan, who's offered a job, and even more serious stuff like who gets flagged as a risk by law enforcement. When AI gets these things wrong because of bias, it can really impact people's lives.

Take, for example, someone applying for a job at an accounting firm. They may be the perfect fit, with years of experience and a degree to back it up. Yet they are rejected not because of anything they could control, but because of characteristics they couldn't, such as race, ethnicity, or background.

Teaching AI to Play Fair

So, how do we teach AI to be fair? First, it's about the data. We need to give AI a balanced view of the world. It's like making sure our AI student reads books from all over the globe, not just one place. We need to feed it data from all sorts of people – different ages, backgrounds, cultures, and lifestyles. This helps AI learn about the diverse world we live in.

But there’s more to it than just the data. We also need to constantly check on AI, like a teacher checks a student’s homework. We need to look at the decisions AI is making and ask, “Does this seem fair?” If it doesn't, it's back to the drawing board. We tweak and adjust until AI starts making decisions that are fair and just.
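One simple way to "check the homework" is to compare how often each group gets a positive decision. The sketch below is only an illustration under stated assumptions: the `selection_rates` and `disparity_ratio` helpers, the audit log, and the 0.8 cutoff are all hypothetical. The cutoff echoes the "four-fifths" rule of thumb sometimes used in hiring audits, but the right threshold depends on your context, and a single number never settles whether a system is fair.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Map each group to the fraction of its members who got a positive decision."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def disparity_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so the rates are equal
    return min(rates.values()) / highest

# Hypothetical audit log: (group, was the applicant shortlisted?)
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparity_ratio(rates)
print(rates)
print(f"disparity ratio: {ratio:.2f}")
if ratio < 0.8:  # assumed threshold; pick one that suits your context
    print("Worth a closer look: one group is selected far less often.")
```

A low ratio doesn't prove the AI is unfair on its own, but it tells you where to start asking "Does this seem fair?"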

A Community Effort

Making AI fair isn’t something we can leave up to the techies alone. It's a community effort. We need people from all walks of life involved in AI development – different genders, races, cultures, and backgrounds. When you have a diverse group of people working on AI, they bring different perspectives. They can spot potential biases that others might miss.

Implication #1: Bias

Implication #2: Privacy

Imagine living in a world where everything you do, every choice you make, and every preference you have is quietly observed and noted. That's essentially the world we're stepping into with the advancement of Artificial Intelligence. AI, with its incredible ability to sift through mountains of data and discern patterns, can be immensely helpful. But it also raises some serious questions about privacy. How much should AI know about us, and who gets to decide what's done with that information?

The Super-Genius Who Remembers Everything

AI is all about gathering and analyzing data. It's like it has a giant scrapbook of all the things we do online – what we buy, the places we go, the posts we like, and even the photos we share.

Every time we browse the internet, shop online, interact on social media, or even just carry our smartphones around, we leave behind digital footprints. These footprints are like tiny breadcrumbs that AI uses to understand us better.

In many ways, this can be fantastic. It helps AI tailor our online experience, recommending products we'll like, content that will engage us, and even friends we might want to connect with.

But as these footprints accumulate, they form a detailed picture of our personal lives. The question then becomes: who gets to see and use this picture?

Where's the Line?

In the world of artificial intelligence, deciding how far AI should reach into our personal lives is like drawing lines in ever-shifting sand. Each day, as we go about our digital activities, whether it's making online purchases, engaging with social media, or conducting web searches, we unwittingly reveal fragments of our own stories. It's like jotting down thoughts in a diary, except this diary isn't securely locked away.

Undeniably, these fragments of information play an invaluable role in enhancing our AI-driven experiences, offering tailored movie suggestions, restaurant recommendations, and personalized interactions. Yet there's an important caveat: not every page of our digital diary should be laid bare for all to peruse. It's our narrative, and we should have the right to decide who gains access.

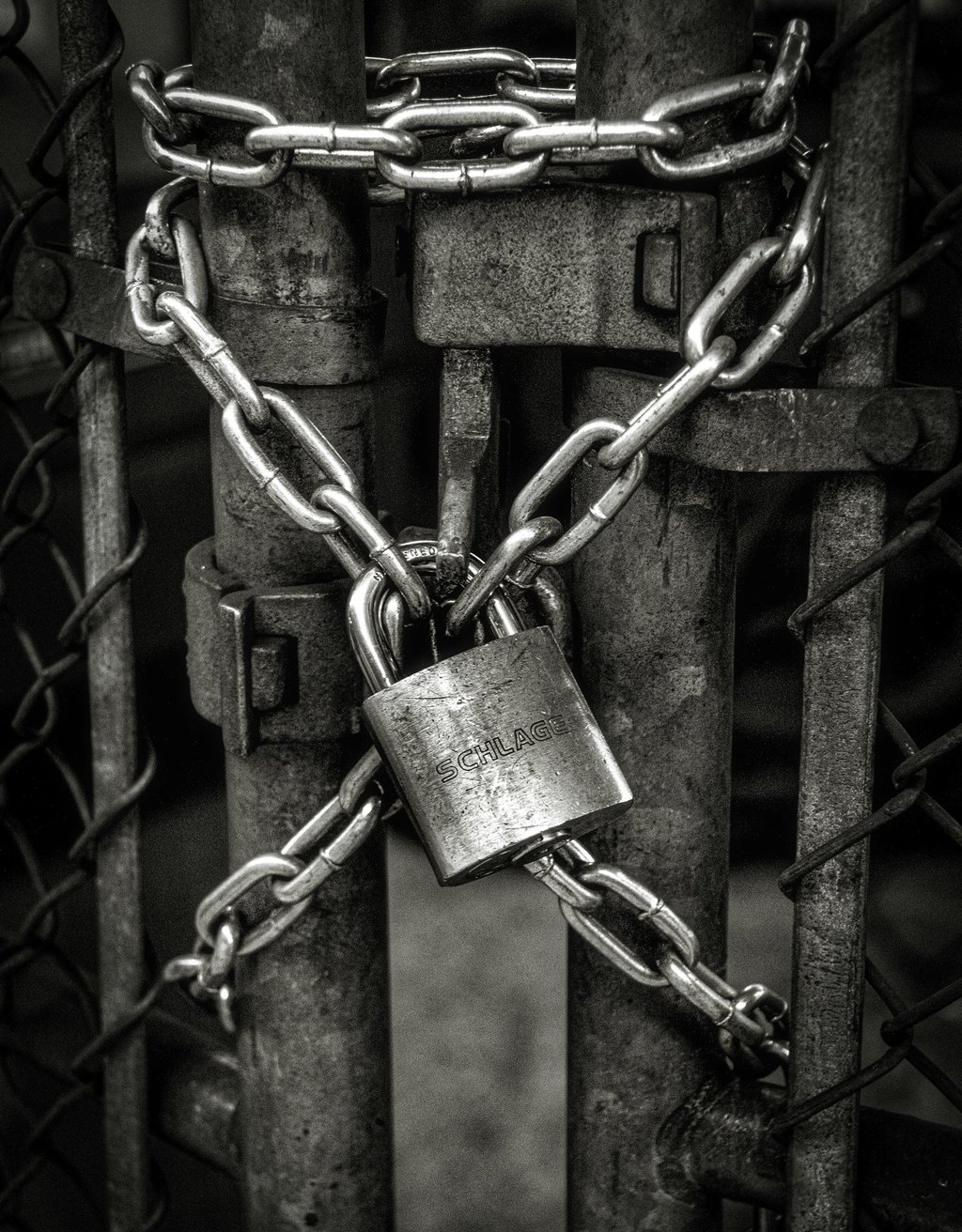

Keeping Our Secrets Safe

So, how do we ensure the protection of our digital secrets? Imagine it as placing a fortress-like lock on that diary. Companies harnessing the capabilities of AI must not be granted unrestricted entry into our digital realms. Instead, we require well-defined rules and protocols, akin to a polite knock on the door, asking for our explicit consent before they can delve into our personal data.

Transparency emerges as a cornerstone in this AI-centric era, requiring companies to communicate openly about their motives concerning our data. Are they utilizing it solely to enhance their services for our benefit, or do they have plans to share it with external organizations? Above all, our data should remain confidential and immune to distribution without our explicit authorization.
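The "polite knock on the door" can be pictured as a check that runs before any data is handed over. Here's a minimal sketch, assuming a made-up `ConsentRecord` that lists the purposes a user has agreed to and a hypothetical `fetch_user_data` function; real systems are far more involved, and none of these names come from an actual library.

```python
from dataclasses import dataclass, field

@dataclass
class ConsentRecord:
    """Purposes a user has explicitly agreed to (hypothetical structure)."""
    user_id: str
    allowed_purposes: set = field(default_factory=set)

class ConsentError(PermissionError):
    """Raised when data is requested for a purpose the user never approved."""

def fetch_user_data(record, purpose, data_store):
    """Return the user's data only if they consented to this purpose."""
    if purpose not in record.allowed_purposes:
        raise ConsentError(f"No consent from {record.user_id} for '{purpose}'")
    return data_store[record.user_id]

# Hypothetical data: one user who agreed to recommendations and nothing else.
data_store = {"user-1": {"liked_songs": ["Song X"]}}
consent = ConsentRecord("user-1", {"recommendations"})

print(fetch_user_data(consent, "recommendations", data_store))

try:
    fetch_user_data(consent, "third_party_sharing", data_store)
except ConsentError as err:
    print(f"Blocked: {err}")
```

The point of the design is that sharing with outside organizations fails by default: unless the user has said yes to that specific purpose, the door stays shut.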

The Importance of Strong Digital Locks

Just like we lock our front doors to keep out unwanted visitors, the digital world needs strong protection to keep our valuable data safe. Making sure that the technology keeping our digital stuff secure is really top-notch is super important. We definitely don't want any sneaky digital thieves getting into our online spaces and taking our personal info.

In today's world, where we're all pretty much living our lives online, good data security is a big deal. It's not just about keeping the bad guys away from the front door; it's about making sure they can't break through the walls of our digital homes either. Our tech needs to be up to the task because, let's be honest, no one wants their private stuff falling into the wrong hands.

What Happens When the Locks Break?

But what happens when, despite our best efforts, the locks break? In today's world, where cyberattacks are unfortunately common, data breaches can happen even when we've done our best to protect ourselves. Whether it's a clever hacker finding a weakness or an accidental leak of sensitive info, the consequences can be pretty serious.

So, when these tough moments occur, how companies respond is crucial. They need to be honest about what went wrong, just like a friend who accidentally spills your secrets. Being upfront and taking responsibility are key.

Next, they should communicate quickly and openly. Just as a good friend would tell you right away what happened, companies should let the people affected know about the breach and tell the public what's going on. Being transparent isn't just about revealing the problem's size; it's also about showing how they plan to fix it.

And finally, they need to show they're committed to preventing it from happening again. Like a trusted friend assuring you they won't let your secret slip again, companies should explain the concrete steps they're taking to boost their security. This might mean beefing up their defenses, checking everything thoroughly, and putting strict rules in place.

"Lock" Images - Unsplash.com